Scrappy Team of 6 Builds Healthcare's AI Future and Lands Big Bucks

How I worked with AWS & Anthropic to help architect and steer an entire organization's AI, product design, and funding trajectory

The Challenge: Support Drowning in Its Own Success

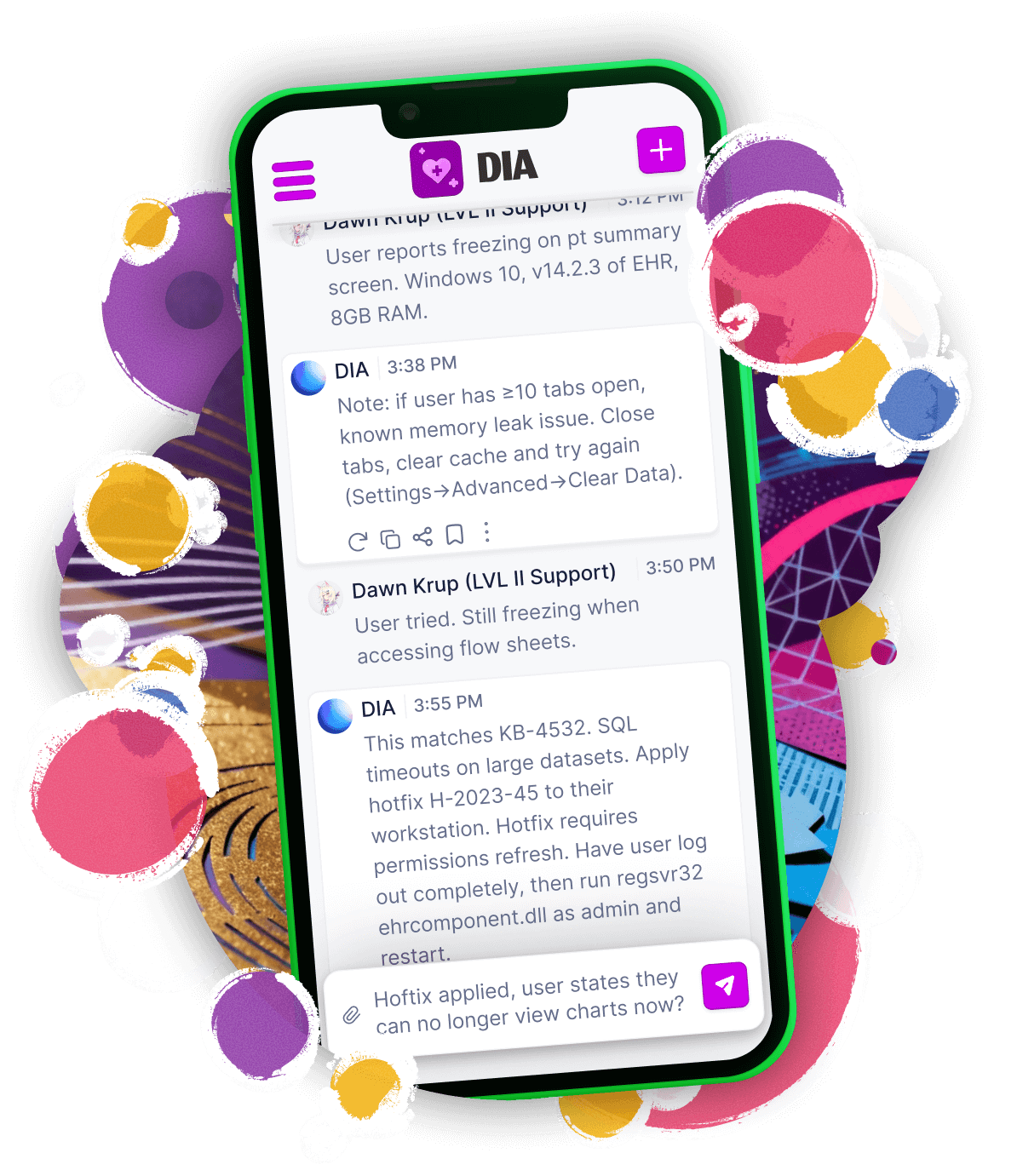

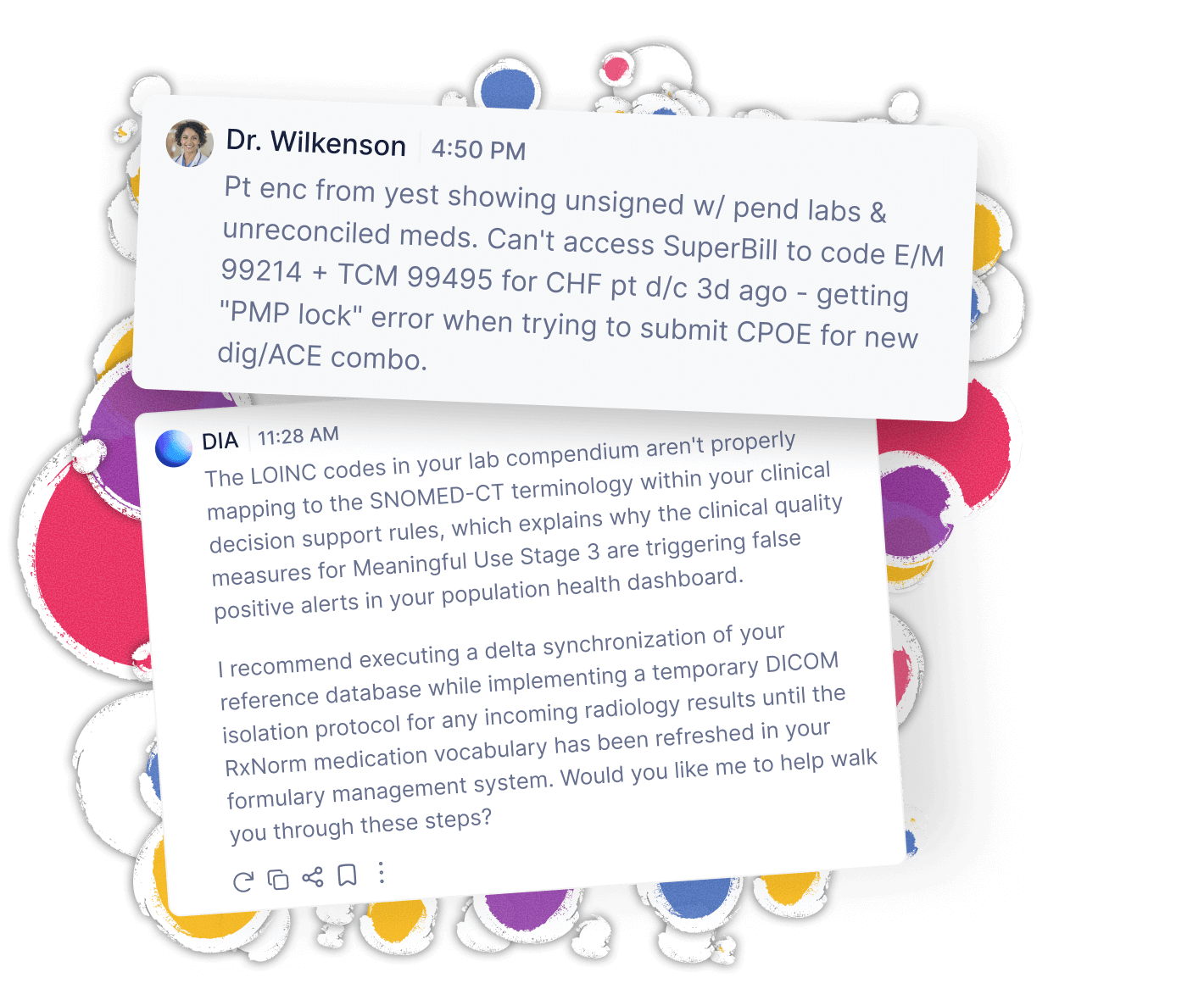

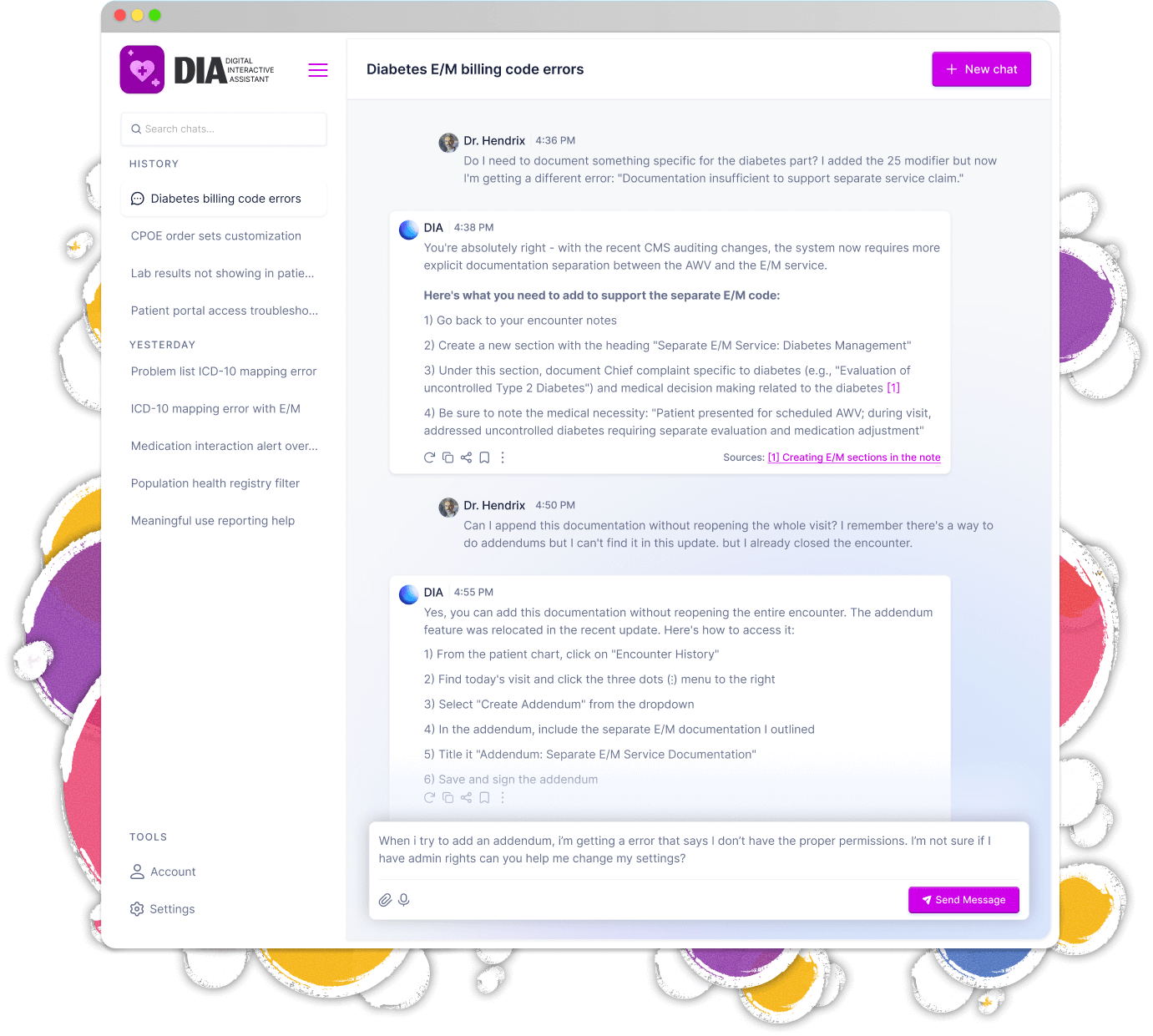

Healthcare providers trying to document patient visits were hitting EHR errors and waiting 24 hours for support responses. Many times, these answers could be found within minutes and users could fix their concern and move on. If you knew where to look. But a tangled web of documentation for various platforms crafted over decades made this nearly impossible.

This was the reality for thousands of providers using our enterprise EHR platforms. Our Level 1 support team was drowning in complexity - medical jargon met software intricacies, creating a perfect storm of frustration. Support tickets piled up, and our Salesforce metrics painted an ugly picture.

But we had 15,000+ support documents spanning over a decade, covering everything from legacy EHR systems to modern patient portals, revenue platforms, regulatory, etc. The knowledge existed - it just wasn't easily accessible. Like, at all.

This wasn't just about fixing support though. This was Greenway's first-ever AI initiative. A proof of concept that would fundamentally reshape our entire product roadmap. The stakes were massive: get this right, and we'd unlock millions in PE funding while transforming into an AI-first healthcare company. Get it wrong, and we'd remain stuck in the past while competitors raced ahead.

Industry

The Stakes

My Role

Core Challenge

Our Approach: Move Fast, Break Things, Build the Future

We assembled a scrappy team of six - cherry-picked from UX, engineering, data, and platform teams. This wasn't your typical corporate project with endless meetings and approval chains. Our Chief Product & Technology Officer became our biggest champion (after some initial skepticism), bulldozing roadblocks so we could move at startup speed inside an enterprise org that was used to moving much slower.

The mission was a three headed monster to be tamed:

- Prove AI could transform our business - Success here would unlock millions in PE funding and pivot our entire product roadmap to AI-first development

- Build something that understood healthcare context - A system that could parse the complex relationships between clinical workflows, billing processes, our software platforms, and the technical documentation that supports them

- Ship it for our major conference - We were scheduled to demo this live at a huge upcoming event. Nothing like a public deadline to focus the mind and kick the public speaking jitters into overdrive

The Technical Foundation

We started with GPT-3.5 for proof of concept, then pivoted to Claude Instant, and finally landed on Claude Haiku. The combination of speed, accuracy, and direct access to Anthropic's research team through AWS made the decision clear. This wasn't just tool selection - we were essentially auditioning partners for what would become a multi-million dollar AI transformation. AWS won, and they're now investing their own resources into our success.

Our retrieval-augmented generation pipeline was designed on AWS Bedrock and supporting AWS services:

- Amazon OpenSearch Service → Vector similarity search to retrieve relevant answers despite the quirks of healthcare-specific language

- Titan Embeddings (via Bedrock) → Encoded our mix of medical and technical terminology into dense vector space for accurate retrieval

- Amazon Kendra → Added natural-language search over unstructured documentation, improving recall for FAQ-style and loosely structured content

- Amazon S3 → Storage for 15+ years of processed support documentation

- Amazon DynamoDB → Maintained conversation and session state across multi-turn interactions (we migrated here from RDS for scale and performance)

As we didn't have the time to allow for pre-training of the model or building post-reply evals to supplement the RAG pipeline, the secret sauce was structuring the system prompt to help mitigate worries of abuse or hallucinations. It also had to be modular and expandable. One prompt architecture that could adapt across multiple platforms - from EHRs to billing systems to regulatory reporting tools. Think of it as a Swiss Army knife for healthcare support.

Prompt Engineering: Teaching AI to Speak Healthcare

Working directly with Anthropic's research team (thanks to our AWS partnership), I crafted prompts that could navigate healthcare's linguistic minefield. We're talking about a domain where "discharge" has multiple meanings, where SOAP notes aren't about hygiene, and where terminology precision matters. Some key prompt engineering strategies I implemented:

XML Tagging and Structure

LLMs love the structured format and it helps to keep them on the pathChain-of-Thought Reasoning

Slowing the model down using </thinking> tags and internal monologueExplicit Roles and Guardrails

Modularity and sensitivity for multiple platforms and sensitive healthcare topicsIdeal LLM Reply Examples

Showing models exactly how to output their work is an under rated protipGeneral Drift Prevention

Techniques to keep responses grounded and factual. Avoiding negations is hugePlot Twist: When Documentation Moves Faster Than Documentation

Being Greenway's first AI project meant we were literally building the plane while flying it. AWS's documentation still referenced their old SageMaker platform while we were pioneering on Bedrock. Some days it felt like assembling IKEA furniture with stereo instructions.

The confidence problem: When the AI was wrong, it was confidently wrong. Evaluating response accuracy became our biggest challenge. Healthcare + AI = zero margin for error when patient care is involved.

The CSS adventure: With our conference deadline looming and the dev team slammed, I dusted off my decade-old front-end skills to add some polish to the UI. Limited access to the full codebase meant MacGyvering a fully responsive interface using some crafty CSS pseudo-elements the sticky footers and slide-out chat history panels. All crafted in a Chrome browser window by passing style snippets back to the DEV building the UI. I'll admit, not exactly production ready by any means but sometimes the best solution is the one that ships.